Getting started with real-time 3D

Data maturity

Leveraging your existing 3D models for use in real-time 3D applications can range in difficulty, depending on the quality of your data (and your metadata), your target applications and devices, and your technical expertise. While it’s easy to access the tools and start experimenting with them, or even complete a one-off project, developing scalable workflows is another matter.

“If you want to really take advantage of your CAD and PLM data, you need to put some effort in it. Then you can do some amazing, powerful things,” Axel Jacquet, technical product manager at Unity, told engineering.com.

Franck agrees, acknowledging that “it’s a tricky process to update old models and pipelines.” But like Axel, he believes that the investment is well worth the cost and can “give better reactivity to any company when that transition to a metadata-driven pipeline is done.”

For newcomers to real-time 3D, the takeaway is positive: there’s little harm in trying it and much reward if you’re willing to put in the effort. The level of effort depends on several factors, the most important of which is a concept that Brad calls data maturity. Mature data is well organized and rich in metadata that can be used to automate data optimization. Immature data is scattered across multiple systems and lacks metadata. Data maturity is a spectrum, and it’s important to understand where your company lies on it.

“There are levels of automation there,” Brad says. “If the data is not mature enough, we can’t really unlock a full automation pipeline. But we could do anywhere from 50 percent and then maybe there’s a bit of manual work left.”

Even if your data is not yet mature enough to unlock full automation, you can always improve it for new models by ensuring the proper metadata is in place. In fact, it’s never too early to establish best practices and repeatable patterns for naming conventions and metadata use.
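One way to make data maturity concrete is to measure how many parts already carry the metadata an automated pipeline would rely on. The following is a minimal sketch in plain Python, not a Pixyz or PLM API: the required field names (`part_number`, `material`, `function`) and the part dictionaries are hypothetical and would need to match your own conventions.

```python
# Hypothetical list of metadata fields an automated pipeline would need.
REQUIRED_FIELDS = ("part_number", "material", "function")


def missing_fields(part):
    """Return the required metadata fields that are absent or empty on a part."""
    metadata = part.get("metadata", {})
    return [field for field in REQUIRED_FIELDS if not metadata.get(field)]


def maturity_score(parts):
    """Return the fraction of parts (0.0 to 1.0) that carry every required field."""
    if not parts:
        return 0.0
    complete = sum(1 for part in parts if not missing_fields(part))
    return complete / len(parts)


# Illustrative data only: one fully annotated part and one incomplete part.
parts = [
    {
        "name": "door_left",
        "metadata": {"part_number": "A-100", "material": "steel", "function": "closure"},
    },
    {"name": "bolt_017", "metadata": {"part_number": "B-017"}},
]

score = maturity_score(parts)
gaps = {part["name"]: missing_fields(part) for part in parts}
```

A report like `gaps` could be shared with designers so that missing annotations are added at the design stage rather than discovered downstream.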

“Start annotating data at that design stage,” Brad says. “It has this knock-on effect. You might not want to add an extra 10 minutes to the designer to add metadata but when you start to understand that 10 minutes here saves thousands of hours down the end, over repetition, that’s where you start to see that payoff.”

Understanding your target application and platform

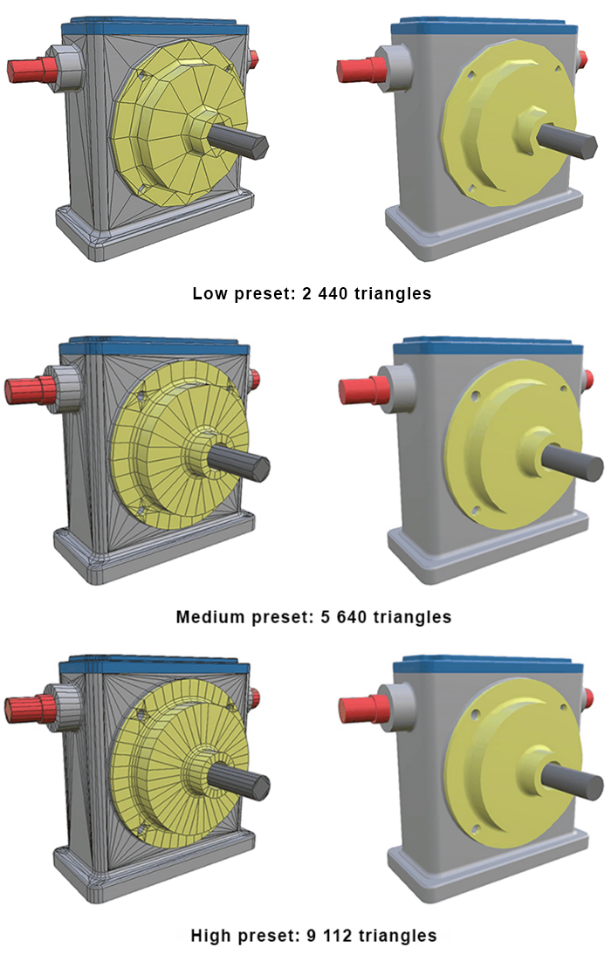

The optimal form of real-time 3D data depends on the end use case—both the application and the device that will power it. A 3D model viewer on a smartphone will have different requirements from a factory simulation running on a high-end workstation. To create an effective real-time 3D experience, you need to understand both the hardware and software constraints.

In terms of hardware, Axel says that looking up the specs of your target device (e.g., the Meta Quest 3 VR headset) is a good place to start. With a bit of trial and error, you’ll figure out the best way to optimize your models in terms of mesh size, part merging, and the other techniques described in this whitepaper. Pixyz may soon include hardware presets that make optimizing for a target device even easier.

In terms of software, it’s also crucial to understand what end-users will do with your models. For instance, you can’t merge every part of a car assembly if you expect users to open the door and you can’t remove parts like the engine if you expect users to lift the hood. Your approach to optimizing any CAD model should account for its role in the real-time application.
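The car example can be sketched as a small planning step that runs before any optimization. This is an illustrative sketch in plain Python under simplifying assumptions: the part names, the `interactive` and `revealed` sets, and the three-way plan (keep separate, merge, remove) are hypothetical and are not drawn from any specific tool.

```python
def plan_optimization(parts, interactive, revealed):
    """Decide what to do with each part based on how users will interact with it.

    parts: dict mapping part name -> True if the part is visible by default.
    interactive: set of part names the user can move (for example, a door).
    revealed: set of hidden part names that become visible after an interaction.
    """
    plan = {"keep_separate": [], "merge": [], "remove": []}
    for name, visible in parts.items():
        if name in interactive:
            # Movable parts must remain individual objects.
            plan["keep_separate"].append(name)
        elif visible or name in revealed:
            # Static parts that can be seen are candidates for merging.
            plan["merge"].append(name)
        else:
            # Parts that are never seen can be dropped for this application.
            plan["remove"].append(name)
    return plan


# Illustrative assembly: the engine is hidden until the user lifts the hood.
car_parts = {"body": True, "door": True, "hood": True, "engine": False, "wiring": False}
plan = plan_optimization(car_parts, interactive={"door", "hood"}, revealed={"engine"})
```

Here the door and hood stay separate, the engine is retained because lifting the hood reveals it, and only the wiring is removed.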

“You need to know what your final goal is, what your final scenario is, and from there you will be able to find a good recipe,” Axel says.

Some organizations like to start out with what Axel calls a “150 percent model,” a master version of the data with as much detail as possible. “It’s really the perfect digital twin of their actual product,” he says. This model can be used as the starting point for any other real-time 3D application. With nothing left to add, it’s just a matter of deciding what to remove for any given application and device.

“To me that’s the perfect way to go, because you need to manage one big database of perfect data and then you can create some export or optimization scenarios to push to your channels,” Axel says.
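The idea of one master model feeding several channels can be sketched as a set of export scenarios, each with its own budget. This is a minimal sketch under stated assumptions: the `ExportScenario` type, the channel names, and the triangle budgets are hypothetical placeholders, not published device specifications or Pixyz settings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExportScenario:
    """A hypothetical export target derived from the master model."""
    channel: str
    triangle_budget: int


def decimation_ratio(master_triangles, scenario):
    """Return the fraction of master triangles to keep, capped at 1.0."""
    if master_triangles <= 0:
        raise ValueError("master_triangles must be positive")
    return min(1.0, scenario.triangle_budget / master_triangles)


# Illustrative numbers only; real budgets come from testing on the target device.
MASTER_TRIANGLES = 10_000_000
scenarios = [
    ExportScenario(channel="vr_headset", triangle_budget=500_000),
    ExportScenario(channel="workstation", triangle_budget=20_000_000),
]

ratios = {s.channel: decimation_ratio(MASTER_TRIANGLES, s) for s in scenarios}
```

Keeping the scenarios as data means a new channel is one more entry in the list, while the master model itself stays untouched.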

Of course, whether the 150 percent route is viable depends on a company’s goals, culture and level of data maturity. There are many valid approaches to optimizing real-time 3D data but the most effective ones all make use of automation to some degree.

“Pixyz lets a company optimize data on demand, giving reactivity and agile development. With scripting, you can automate most of it to adapt to your needs,” Franck says.

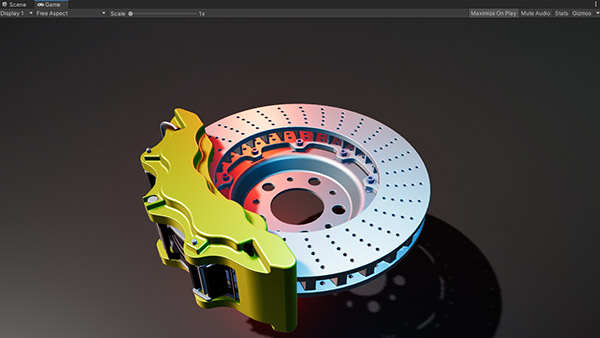

In an age of augmented reality (AR), virtual reality (VR) and mixed reality (MR), where everything from flight simulators to automotive displays to product ads is rendered in interactive 3D, engineering companies are investing in immersive, real-time 3D experiences that differentiate their brands and connect with their customers.
